I'm by no means an expert in this field, so my take is going to be less than professional. But my impression is that although the Bayesian/Frequentist debate is interesting and intellectually fun, there's really not much "there" there...a sea change in statistical methods is not going to produce big leaps in the performance of statistical models or the reliability of statisticians' conclusions about the world.

Why do I think this? Basically, because Bayesian inference has been around for a while - several decades, in fact - and people still do Frequentist inference. If Bayesian inference were clearly and obviously better, Frequentist inference would be a thing of the past. The fact that both still coexist strongly hints that either the difference is a matter of taste, or else the two methods are of different utility in different situations.

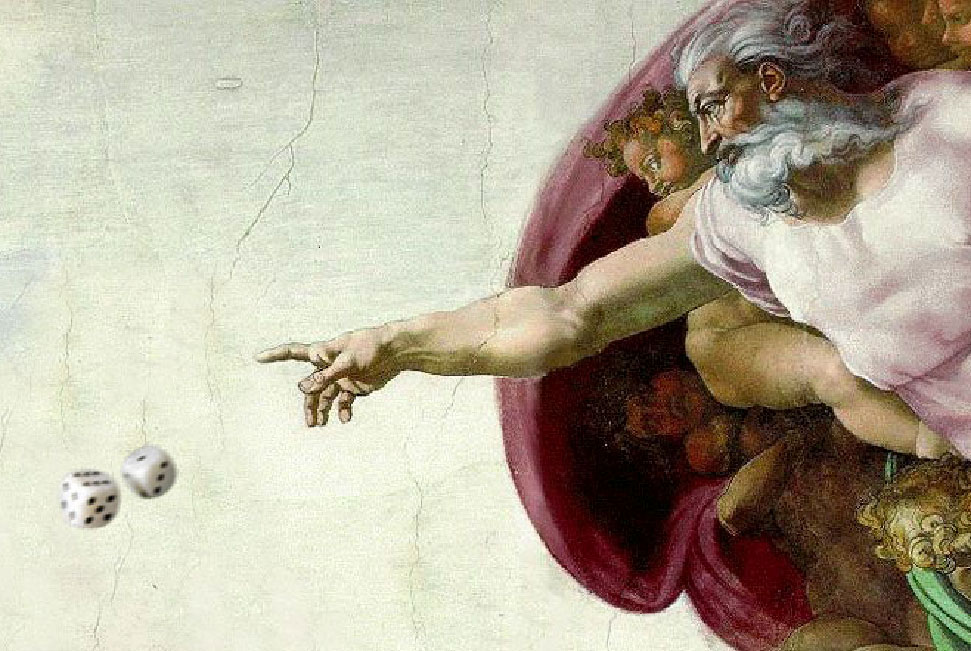

So, my prior is that despite being so-hip-right-now, Bayesian is not the Statistical Jesus.

I actually have some other reasons for thinking this. It seems to me that the big difference between Bayesian and Frequentist generally comes when the data is kind of crappy. When you have tons and tons of (very informative) data, your Bayesian priors are going to get swamped by the evidence, and your Frequentist hypothesis tests are going to find everything worth finding (Note: this is actually not always true; see Cosma Shalizi for an extreme example where Bayesian methods fail to draw a simple conclusion from infinite data). The big difference, it seems to me, comes in when you have a bit of data, but not much.
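To make the "priors get swamped" point concrete, here's a minimal sketch assuming the simplest textbook setup: coin flips with a conjugate Beta(a, b) prior, where the posterior mean of the heads probability is (a + heads) / (a + b + n). The two priors and the 60% heads rate are made-up illustration values, and `beta_posterior_mean` is a hypothetical helper, not any library's API. This is the well-behaved case; Shalizi's example is exactly one where this convergence fails.

```python
# Minimal sketch: Bernoulli (coin-flip) data with a conjugate Beta(a, b) prior.
# Priors and sample sizes below are hypothetical illustration values.

def beta_posterior_mean(a: float, b: float, heads: int, n: int) -> float:
    """Posterior mean of the heads probability after `heads` successes in n flips."""
    return (a + heads) / (a + b + n)

# Two analysts with very different priors about the same coin.
optimist = (8.0, 2.0)   # prior mean 0.8
pessimist = (2.0, 8.0)  # prior mean 0.2

# Same observed frequency (60% heads) at a small and a large sample size.
for n in (10, 10_000):
    heads = 6 * n // 10  # integer arithmetic avoids float rounding
    m_opt = beta_posterior_mean(*optimist, heads, n)
    m_pes = beta_posterior_mean(*pessimist, heads, n)
    print(f"n={n:>6}: optimist {m_opt:.3f}, pessimist {m_pes:.3f}, gap {m_opt - m_pes:.3f}")
```

With 10 flips the two analysts still disagree by 0.3; with 10,000 flips the gap shrinks to well under 0.001, because the prior counts for only a + b = 10 "pseudo-flips".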

When you have a bit of data, but not much, Frequentist - at least, the classical type of hypothesis testing - basically just throws up its hands and says "We don't know." It provides no guidance one way or another as to how to proceed. Bayesian, on the other hand, says "Go with your priors." That gives Bayesian an opportunity to be better than Frequentist - it's often better to temper your judgment with a little bit of data than to throw away the little bit of data. Advantage: Bayesian.
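A minimal sketch of that contrast, assuming a hypothetical coin that lands heads 7 times in 10 flips. The Frequentist side is an exact two-sided binomial test of a fair coin (using the common convention of summing all outcomes no more likely than the observed one); the Bayesian side assumes a mildly informative Beta(2, 2) prior, chosen here purely for illustration, and reports the posterior probability that the coin favors heads. All function names are hypothetical helpers written for this example.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n Bernoulli(p) trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def two_sided_binom_test(k: int, n: int, p0: float = 0.5) -> float:
    """Exact two-sided p-value: total H0 probability of outcomes no more likely than k."""
    observed = binom_pmf(k, n, p0)
    # Small relative tolerance so floating-point ties (e.g. k=3 vs k=7) are included.
    return sum(
        binom_pmf(i, n, p0)
        for i in range(n + 1)
        if binom_pmf(i, n, p0) <= observed * (1 + 1e-7)
    )

def beta_prob_above_half(a: int, b: int) -> float:
    """P(theta > 0.5) for theta ~ Beta(a, b) with integer a, b.

    Uses the identity P(theta <= x) = P(Y >= a) for Y ~ Binomial(a + b - 1, x),
    so P(theta > 0.5) = P(Y <= a - 1) with x = 0.5.
    """
    m = a + b - 1
    return sum(binom_pmf(j, m, 0.5) for j in range(a))

heads, n = 7, 10
p_value = two_sided_binom_test(heads, n)

# Hypothetical Beta(2, 2) prior; the conjugate posterior is Beta(2 + heads, 2 + tails).
a_post, b_post = 2 + heads, 2 + (n - heads)
print(f"two-sided p-value: {p_value:.3f}")
print(f"posterior P(theta > 0.5): {beta_prob_above_half(a_post, b_post):.3f}")
```

The test gives a p-value of about 0.34, which is "we don't know" territory, while the posterior puts roughly 87% probability on the coin favoring heads. That posterior number is only as good as the Beta(2, 2) assumption behind it.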

BUT, this is dangerous. Sometimes your priors are totally nuts (again, see Shalizi's example for an extreme case of this). In this case, you're in trouble. And here's where I feel like Frequentist might sometimes have an advantage. In Bayesian, you (formally) update your priors by conditioning only on the data. In Frequentist, in practice, it seems to me that when the data is not very informative, people also condition their priors on the fact that the data isn't very informative. In other words, if I have a strong prior and crappy data, in Bayesian I know exactly what to do; I stick with my priors. In Frequentist, nobody tells me what to do, but what I'll probably do is weaken my prior based on the fact that I couldn't find strong support for it. Put another way, Bayesians seem in danger of choosing too narrow a definition of what constitutes "data".
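Here's a minimal sketch of "strong prior plus crappy data means you stick with your prior", again assuming the conjugate Beta-binomial coin model. In that model the posterior mean is a weighted average of the sample frequency and the prior mean, with the data's weight equal to n / (a + b + n). The Beta(90, 10) prior and the 3-heads-in-10 data are hypothetical numbers, and the helper name is made up for this example.

```python
def posterior_mean_as_weighted_average(a: float, b: float, heads: int, n: int):
    """Return (data_weight, posterior_mean) for a Beta(a, b) prior and binomial data.

    The conjugate posterior mean equals
        w * (heads / n) + (1 - w) * a / (a + b),  with  w = n / (a + b + n),
    so the prior behaves like a + b extra "pseudo-flips".
    """
    w = n / (a + b + n)
    mean = w * (heads / n) + (1 - w) * (a / (a + b))
    return w, mean

# Hypothetical strong prior: Beta(90, 10), mean 0.9, worth 100 pseudo-flips.
# Hypothetical weak data: 3 heads in 10 flips (sample frequency 0.3).
w, mean = posterior_mean_as_weighted_average(90, 10, heads=3, n=10)
print(f"weight on data: {w:.3f}, posterior mean: {mean:.3f}")
```

The data get less than 10% of the weight, so the posterior mean stays near 0.85 even though the observed frequency was 0.3. If the prior is nuts, the formal machinery does nothing to flag that.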

(I'm sure I've said this clumsily, and a statistician listening to me say this in person would probably smack me in the head. Sorry.)

But anyway, it seems to me that the interesting differences between Bayesian and Frequentist depend mainly on the behavior of the scientist in situations where the data is not so awesome. For Bayesian, it's all about what priors you choose. Choose bad priors, and you get bad results...garbage in, garbage out (GIGO), basically. For Frequentist, it's about what hypotheses you choose to test, how heavily you penalize Type 1 errors (false positives) relative to Type 2 errors (false negatives), and, most crucially, what you do when you don't get clear results. There can be "good Bayesians" and "bad Bayesians", "good Frequentists" and "bad Frequentists". And what's good and bad for each technique can be highly situational.
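The Type 1 vs. Type 2 choice can be sketched with a hypothetical one-sided test: flip a coin 20 times and reject "the coin is fair" if you see at least k heads, where the alternative you care about is a coin that lands heads 70% of the time. The sample size, the alternative, and the helper name are all assumptions for illustration.

```python
from math import comb

def binom_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

n = 20        # hypothetical number of coin flips
p_null = 0.5  # H0: fair coin
p_alt = 0.7   # hypothetical alternative we would like to detect

print(" k  type I (alpha)  type II (beta)")
for k in range(12, 18):
    alpha = binom_tail(k, n, p_null)    # reject H0 (X >= k) even though H0 is true
    beta = 1 - binom_tail(k, n, p_alt)  # fail to reject even though the alternative is true
    print(f"{k:>2}  {alpha:>14.3f}  {beta:>14.3f}")
```

Every step up in the threshold k buys a smaller Type 1 error rate at the price of a larger Type 2 error rate; the test itself doesn't say which k to pick, so that's where the analyst's judgment comes in.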

So I'm guessing that the Bayesian/Frequentist thing is mainly a philosophy-of-science question instead of a practical question with a clear answer.

But again, I'm not a statistician, and this is just a guess. I'll try to get a real statistician to write a guest post that explores these issues in a more rigorous, well-informed way.

Update: Every actual statistician or econometrician I've talked to about this has said essentially "This debate is old and boring, both approaches have their uses, we've moved on." So this kind of reinforces my prior that there's no "there" there...

Update 2: Andrew Gelman comments. This part especially caught my eye:

> One thing I’d like economists to get out of this discussion is: statistical ideas matter. To use Smith’s terminology, there is a there there. P-values are not the foundation of all statistics (indeed analysis of p-values can lead people seriously astray). A statistically significant pattern doesn’t always map to the real world in the way that people claim.
>
> Indeed, I’m down on the model of social science in which you try to “prove something” via statistical significance. I prefer the paradigm of exploration and understanding. (See here for an elaboration of this point in the context of a recent controversial example published in an econ journal.)

Update 3: Interestingly, an anonymous commenter writes:

> Whenever I've done Bayesian estimation of macro models (using Dynare/IRIS or whatever), the estimates hug the priors pretty tight and so it's really not that different from calibration.

Update 4: A commenter points me to this interesting paper by Robert Kass. Abstract:

> Statistics has moved beyond the frequentist-Bayesian controversies of the past. Where does this leave our ability to interpret results? I suggest that a philosophy compatible with statistical practice, labeled here statistical pragmatism, serves as a foundation for inference. Statistical pragmatism is inclusive and emphasizes the assumptions that connect statistical models with observed data. I argue that introductory courses often mischaracterize the process of statistical inference and I propose an alternative "big picture" depiction.
